How LLMs “Remember”

Context windows, reflection loops, and vector memory for practical agents.

1️⃣ Overview: How LLMs “Remember”

LLMs have no persistent memory across calls: at inference time they see only what is in the context window. If you want an agent to reflect or learn over time, you need an external memory store that holds past experiences and lets the agent recall them later.

That’s where vector DBs like Chroma, Milvus, or BilberryDB come in: they serve as long-term associative memory, retrieving stored items by semantic similarity rather than by exact match.
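To make the context-window limit concrete, here is a minimal sketch of what happens when a conversation outgrows the window. The helper name `fit_to_window` and the word-count "token" proxy are assumptions for illustration only; real models use their own tokenizers and truncation policies.

```python
from collections import deque


def fit_to_window(messages, max_tokens):
    """Keep the most recent messages whose combined size fits the budget."""
    kept = deque()
    used = 0
    for msg in reversed(messages):
        cost = len(msg.split())  # crude token proxy: whitespace-separated words
        if used + cost > max_tokens:
            break  # everything older than this point is invisible to the model
        kept.appendleft(msg)
        used += cost
    return list(kept)


history = [
    "Lesson: verify sources before finalizing summaries.",
    "User: summarize the Q3 report.",
    "Assistant: here is the summary.",
    "User: now summarize the Q4 report.",
]

# With a small budget, the oldest entries (including the lesson) fall out.
window = fit_to_window(history, max_tokens=12)
```

In this sketch the earlier lesson is dropped once the budget is exceeded, which is exactly the gap an external memory store is meant to fill.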

2️⃣ Mapping Memory Types to Storage

| Human Memory | Agentic Equivalent | Stored In | Purpose |
|---|---|---|---|
| Semantic memory | The LLM’s pretrained weights | In-model (no external store) | Static knowledge learned from the training corpus |
| Episodic memory | Past experiences, reflections, outcomes | Vector DB (e.g., Chroma, Milvus, BilberryDB) | Retrieve “lessons” or “context” relevant to new tasks |
| Procedural memory | Patterns for reasoning, reflection, or acting | Prompt scaffolds / tool logic | Encoded as reusable routines (“think → act → reflect”) |

For reflection loops, episodic memory is the dynamic one — it grows and evolves with each task.
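As a rough illustration of why episodic memory is the dynamic layer, the sketch below models one reflection as a record and appends a new one per task, while the procedural routine stays a fixed constant. The names `Reflection`, `record`, and `ROUTINE` are hypothetical, and a plain list stands in for the vector DB.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Procedural memory: a fixed routine, encoded here as a constant.
ROUTINE = ("think", "act", "reflect")


@dataclass
class Reflection:
    """One episodic-memory entry: the lesson plus metadata used for retrieval."""
    text: str
    task_id: str
    tags: list = field(default_factory=list)
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


# Episodic memory: grows by one entry per completed task.
episodic_memory = []


def record(text, task_id, tags):
    """Append a new reflection and return it."""
    entry = Reflection(text=text, task_id=task_id, tags=list(tags))
    episodic_memory.append(entry)
    return entry


record("Verify sources before finalizing summaries.", "task-001",
       ["summarization", "verification"])
record("Ask for the target audience before drafting.", "task-002",
       ["writing"])
```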

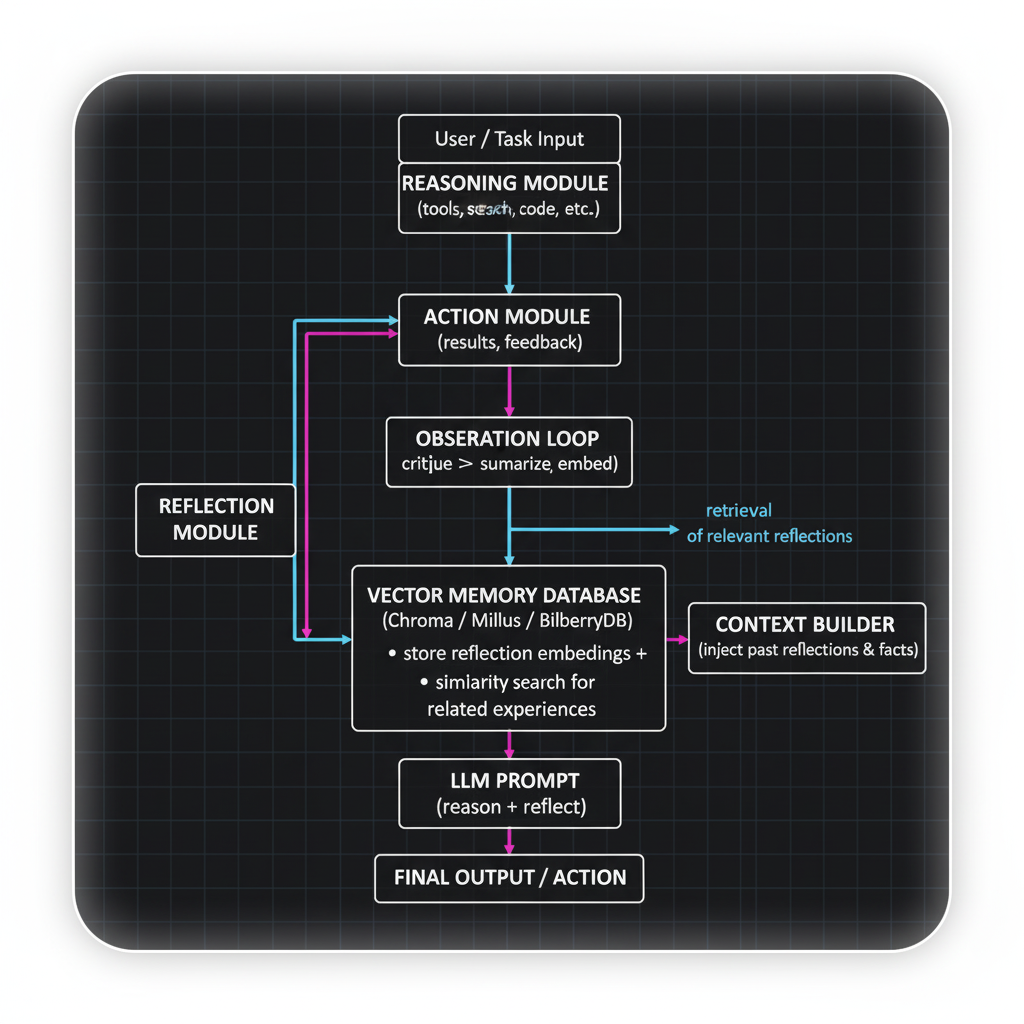

3️⃣ How It Works (Step by Step)

Here’s a typical flow for a ReAct + Reflexion agent using a vector DB:

- Experience: The agent completes a task and generates reflections: “Lesson: Verify sources before finalizing summaries.”

- Embed Reflection: Convert that reflection into a vector with an embedding model (e.g., text-embedding-3-small).

- Store in Vector DB: Save the vector with metadata (task ID, timestamp, tags like “summarization,” “verification”).

- Query Later: Before a new task, query for reflections most similar to the current context.

- Condition Prompt: Inject retrieved reflections into the model’s context to guide reasoning.

That’s how agents “remember” past reasoning — and how reflection loops stay grounded in experience.
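The five steps above can be sketched end to end in plain Python. This is a toy under stated assumptions, not a production setup: `embed` is a bag-of-words stand-in for a real embedding model such as text-embedding-3-small, and `ReflectionStore` is a hypothetical in-memory substitute for a vector DB. Only the shape of the flow (embed, store, query, inject) is the point.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real agent would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class ReflectionStore:
    """Hypothetical in-memory stand-in for a vector DB collection."""

    def __init__(self):
        self._rows = []  # (vector, text, metadata)

    def add(self, text, **metadata):
        self._rows.append((embed(text), text, metadata))

    def query(self, text, k=2):
        q = embed(text)
        ranked = sorted(self._rows, key=lambda r: cosine(q, r[0]), reverse=True)
        return [row_text for _, row_text, _ in ranked[:k]]


def build_prompt(task, lessons):
    """Condition the prompt by injecting retrieved reflections before the task."""
    bullet_list = "\n".join(f"- {lesson}" for lesson in lessons)
    return f"Lessons from past tasks:\n{bullet_list}\n\nTask: {task}"


store = ReflectionStore()
store.add("Lesson: Verify sources before finalizing summaries.",
          task_id="t1", tags=["summarization", "verification"])
store.add("Lesson: Run the unit tests before committing code.",
          task_id="t2", tags=["coding"])

task = "Write a summary of these sources."
lessons = store.query(task, k=1)      # Query Later
prompt = build_prompt(task, lessons)  # Condition Prompt
```

Word overlap is a weak proxy for meaning; a learned embedding model would also match paraphrases that share no words with the stored reflection.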

4️⃣ Differences Between Chroma, Milvus, BilberryDB

| DB | Strength | Best Use |
|---|---|---|
| Chroma | Simple, local, great for prototypes | Single-agent reflection storage or desktop tools |
| Milvus | Distributed, scalable | Multi-agent systems or long-term learning environments |
| BilberryDB | Memory-native LLM integration (recent) | Agents needing recall and reasoning synchronization |

In short:

- Use Chroma to learn the concept.

- Use Milvus when scaling persistent agent memory.

- Use BilberryDB to experiment with higher-level, cognitive-style memory.
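Because the choice of store tends to change as a project grows, one option is to keep agent code behind a small interface so the backend can be swapped later. The sketch below is an assumption-laden illustration: `MemoryBackend`, `InMemoryBackend`, and `recall` are hypothetical names, and no real Chroma, Milvus, or BilberryDB client calls are shown, since each has its own API.

```python
from typing import Protocol, runtime_checkable


@runtime_checkable
class MemoryBackend(Protocol):
    """Minimal contract an agent needs from any memory store."""

    def add(self, text: str, metadata: dict) -> None: ...

    def search(self, query: str, k: int) -> list: ...


class InMemoryBackend:
    """Prototype backend; ranks by shared words instead of vector similarity."""

    def __init__(self):
        self._items = []  # (text, metadata)

    def add(self, text, metadata):
        self._items.append((text, metadata))

    def search(self, query, k):
        words = set(query.lower().split())
        ranked = sorted(
            self._items,
            key=lambda item: len(words & set(item[0].lower().split())),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


def recall(backend: MemoryBackend, task: str, k: int = 3):
    """Agent-side code depends only on the protocol, not on a specific DB."""
    return backend.search(task, k)


backend = InMemoryBackend()
backend.add("check citations twice", {"tags": ["verification"]})
backend.add("cache api responses", {"tags": ["performance"]})
top = recall(backend, "check the citations", k=1)
```

An adapter for a real vector DB would implement the same two methods by wrapping that database's client.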

                  ┌─────────────────────────────┐
                  │      User / Task Input      │
                  └──────────────┬──────────────┘
                                 │
                                 ▼
                     ┌───────────────────────┐
                     │   REASONING MODULE    │
                     │   (LLM, CoT, ReAct)   │
                     └───────────┬───────────┘
                                 │
                                 ▼
                     ┌───────────────────────┐
                     │     ACTION MODULE     │
                     │    (tools, search,    │
                     │      code, etc.)      │
                     └───────────┬───────────┘
                                 │
                                 ▼
                     ┌───────────────────────┐
                     │   OBSERVATION LOOP    │
                     │  (results, feedback)  │
                     └───────────┬───────────┘
                                 │
                                 ▼
                ┌─────────────────────────────────┐
                │        REFLECTION MODULE        │
                │  critique → summarize → embed   │
                └────────────────┬────────────────┘
                                 │
                                 ▼
        ┌─────────────────────────────────────────────────┐
        │             VECTOR MEMORY DATABASE              │
        │         (Chroma / Milvus / BilberryDB)          │
        │  • store reflection embeddings + metadata       │
        │  • similarity search for related experiences    │
        └─────────────────────────────────────────────────┘
                                 ▲
                                 │  retrieval of relevant reflections
                                 ▼
                     ┌───────────────────────┐
                     │    CONTEXT BUILDER    │
                     │     (inject past      │
                     │ reflections & facts)  │
                     └───────────┬───────────┘
                                 │
                                 ▼
                     ┌───────────────────────┐
                     │      LLM PROMPT       │
                     │  (reason + reflect)   │
                     └───────────┬───────────┘
                                 │
                                 ▼
                  ┌─────────────────────────────┐
                  │    FINAL OUTPUT / ACTION    │
                  └─────────────────────────────┘
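The control flow in the diagram can be approximated with a few stub functions. Everything here is hypothetical scaffolding: `reason`, `act`, `reflect`, and `retrieve` stand in for LLM calls, tool calls, and a similarity search, and a plain list plays the role of the vector memory. The sketch only shows how each task both reads from and writes to memory.

```python
def retrieve(memory, task, k=2):
    """Stub retrieval: returns the k most recent lessons.
    A real system would run a similarity search against the task."""
    return memory[-k:]


def reason(task, lessons):
    """Stub for the reasoning module: builds a plan, conditioned on lessons."""
    hint = f" (keeping in mind: {'; '.join(lessons)})" if lessons else ""
    return f"plan for '{task}'{hint}"


def act(plan):
    """Stub for the action module: pretends a tool ran and returned a result."""
    return f"result of {plan}"


def reflect(task, observation):
    """Stub for the reflection module: critiques the outcome into one lesson."""
    return f"Lesson from '{task}': double-check the output."


def run_task(task, memory):
    """One pass through the loop: retrieve, reason, act, observe, reflect, store."""
    lessons = retrieve(memory, task)           # context builder
    plan = reason(task, lessons)               # reasoning module
    observation = act(plan)                    # action + observation
    memory.append(reflect(task, observation))  # reflection, then storage
    return observation


memory = []
first = run_task("summarize report", memory)
second = run_task("summarize paper", memory)
```

The first task runs with an empty memory; the second is conditioned on the lesson the first one stored, which is the feedback path the diagram draws between the vector memory and the context builder.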