Why We Still Need Traditional Classification Models in the Age of LLMs

A technical-but-approachable deep dive using real code from a multi-model vision pipeline.

Introduction

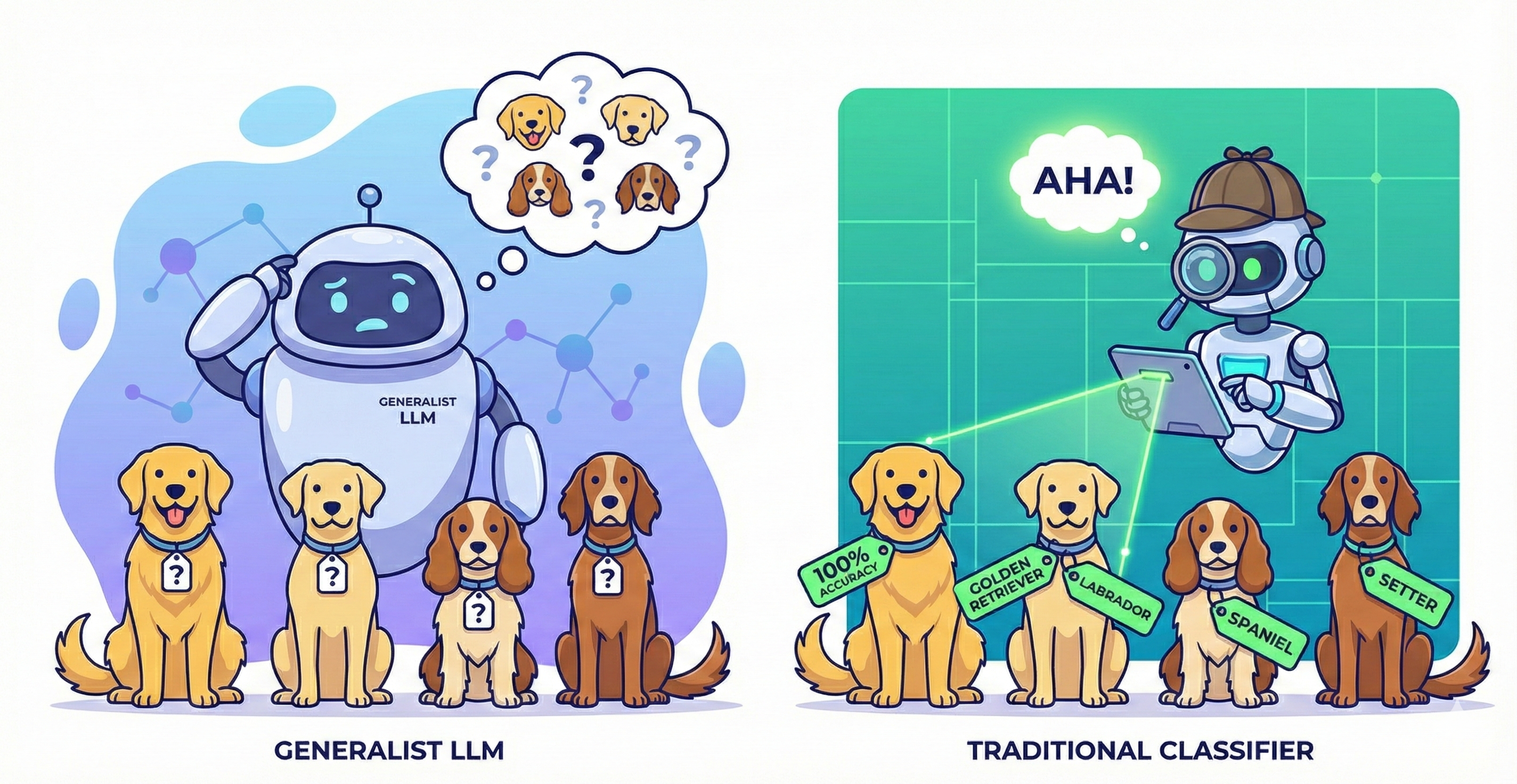

We live in the era of Large Language Models (LLMs) — systems capable of answering questions, generating code, summarizing documents, and even reasoning about visual inputs. Because of this, many teams assume traditional machine learning models (like classifiers and detectors) are obsolete.

But here's the truth:

LLMs are generalists; traditional models are specialists. You still need both.

In the same way a highly educated person may know a little about everything but not specialize in veterinary medicine, a general-purpose LLM can describe an image — but it cannot reliably identify 120 dog breeds or determine the health of coral reefs with scientific consistency.

This post explains why classification models still matter, using a real-world example from my vision-processing pipeline:

- YOLOv8 (object detection)

- Dog breed classifier

- Cat breed classifier

- Wildlife classifier (iNaturalist)

- Scene classifier (Places365)

- Coral classifier (SigLIP model)

- CLIP for image-level + crop-level embeddings

This approach forms the foundation of my "animal vs. people" processing stack — but the exact same architecture works for people, plants, products, or anything else you care about.

Why LLMs Can't Replace Specialized Models (Yet)

Think of an LLM as a very talented intern: great at adapting, reasoning, and improvising — but not great at highly consistent, domain-specific tasks where precision matters.

Analogies help here:

- YOLO is a police K9 trained to detect a specific scent.

- A dog-breed classifier is a world-class veterinarian specializing in one domain.

- LLMs are charismatic generalists capable of giving "pretty good" answers about everything — but rarely perfect at niche tasks.

That's why modern vision systems increasingly look like orchestration layers, where many narrow models assist a few broad ones. LLMs thrive when fed clean, structured signals — and those signals come from traditional models.

The Architecture: A Multi-Model Vision Worker

Below are excerpts from a production vision worker (a Lambda function) responsible for:

- Downloading an image

- Detecting dogs/cats/people

- Refining classification via multiple specialized models

- Generating CLIP embeddings for full images + crops

- Storing everything in vector databases (Qdrant)

- Sending structured metadata to downstream systems

All of it uses open-source models that anyone can download.
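To make the responsibilities listed above concrete, here is a minimal sketch of how a worker could chain the stages. It is not the production Lambda: every stage is injected as a plain function, and the names `detect`, `crop`, `refine`, `embed`, and `store`, along with the record layout, are hypothetical. Because nothing is hard-wired, the skeleton runs with stubs, and real models can be swapped in later.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable

Box = tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


@dataclass
class Detection:
    label: str                 # YOLO class name, e.g. "dog"
    confidence: float          # YOLO confidence in [0, 1]
    box: Box
    refined: dict[str, Any] = field(default_factory=dict)  # specialist output


def process_image(
    image: Any,
    detect: Callable[[Any], list[Detection]],
    crop: Callable[[Any, Box], Any],
    refine: Callable[[Detection, Any], dict[str, Any]],
    embed: Callable[[Any], list[float]],
    store: Callable[[dict[str, Any]], None],
) -> dict[str, Any]:
    """Hypothetical orchestration: detect, refine each crop, embed, store."""
    detections = detect(image)
    crop_embeddings = []
    for det in detections:
        region = crop(image, det.box)
        det.refined = refine(det, region)      # e.g. breed or species
        crop_embeddings.append(embed(region))  # one vector per entity
    record = {
        "image_embedding": embed(image),
        "crop_embeddings": crop_embeddings,
        "detections": [
            {"label": d.label, "confidence": d.confidence, "box": d.box, **d.refined}
            for d in detections
        ],
    }
    store(record)  # e.g. write to a vector DB and notify downstream systems
    return record
```

Injecting the stages keeps the control flow testable without downloading any weights, and it makes it easy to add or drop a specialist (such as the optional coral model) without touching the loop.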

Step 1: General Detection with YOLOv8

A vision-capable LLM can look at pixels too, but the YOLO family has been refined for this one job (fast bounding-box detection) for roughly a decade.

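```python
import numpy as np

def _detect_entities(img):
    model = _ensure_yolo_loaded()  # cached YOLOv8 model
    # [0] is the Results object for the single input image. Ultralytics treats
    # NumPy input as BGR, so if img is an RGB PIL image, passing it directly
    # avoids a channel swap.
    results = model(np.asarray(img))[0]
    ...
```

YOLO gives us bounding boxes for people, dogs, cats, sheep, goats, cows, horses, and deer.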

But YOLO does not tell us what kind of dog it found, or whether a "goat" is actually a misdetected dog (a common error).
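In Ultralytics YOLOv8, each `Results` object exposes `boxes.xyxy`, `boxes.conf`, and `boxes.cls`, plus a `names` dictionary that maps class indices to labels. The sketch below assumes those tensors have already been converted to plain lists (for example with `.tolist()`) and shows one way to keep only the classes of interest and pad each box before cropping. The `WANTED` set, `min_conf`, and `pad` values are illustrative assumptions. Note that the stock COCO-pretrained weights include person, dog, cat, sheep, cow, and horse but not goat or deer, so those two labels require other weights (custom or Open Images V7-trained models, for example).

```python
# Assumption: the pipeline keeps only these labels.
WANTED = {"person", "dog", "cat", "sheep", "cow", "horse", "goat", "deer"}


def extract_entities(names, boxes, confs, classes, img_w, img_h,
                     min_conf=0.35, pad=0.1):
    """Filter YOLO outputs and return padded, clamped crop boxes.

    names:   dict of class index -> label (Results.names)
    boxes:   list of [x1, y1, x2, y2] floats (boxes.xyxy.tolist())
    confs:   list of floats (boxes.conf.tolist())
    classes: list of class indices as floats (boxes.cls.tolist())
    """
    entities = []
    for (x1, y1, x2, y2), conf, cls in zip(boxes, confs, classes):
        label = names[int(cls)]
        if label not in WANTED or conf < min_conf:
            continue
        # Pad the box slightly so specialist classifiers see some context,
        # then clamp it to the image bounds.
        dx, dy = (x2 - x1) * pad, (y2 - y1) * pad
        box = (
            max(0, int(x1 - dx)),
            max(0, int(y1 - dy)),
            min(img_w, int(x2 + dx)),
            min(img_h, int(y2 + dy)),
        )
        entities.append({"label": label, "confidence": conf, "box": box})
    # Highest-confidence detections first.
    return sorted(entities, key=lambda e: e["confidence"], reverse=True)
```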

Step 2: Refining Animal Identification

Here is where traditional classification models shine.

🐶 Dog Breed Classification

Model: prithivMLmods/Dog-Breed-120 (SigLIP-based)

```python
breed_info = _classify_dog_breed(crop)
```

If YOLO says "goat" but the dog classifier says "Golden Retriever" with 0.92 confidence, we override YOLO.
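The override rule can be expressed as a small pure function. This is a sketch under stated assumptions: the breed classifier is assumed to return a breed name and a confidence, the `CONFUSABLE` set of labels YOLO might mistake for a dog is hypothetical, and the 0.85 threshold is a placeholder to tune on your own data rather than a value from the production code.

```python
# Hypothetical: YOLO labels that are sometimes really dogs.
CONFUSABLE = {"goat", "sheep", "cow", "horse", "deer"}


def maybe_override(yolo_label, breed, breed_conf, threshold=0.85):
    """Return (final_label, breed_or_None, overridden) for one detection."""
    confident = breed_conf >= threshold
    if yolo_label == "dog":
        # Species already agrees; only attach the breed if the classifier is sure.
        return "dog", (breed if confident else None), False
    if yolo_label in CONFUSABLE and confident:
        # A confident breed match outranks YOLO's generic label.
        return "dog", breed, True
    # Otherwise trust YOLO and discard the low-confidence breed guess.
    return yolo_label, None, False
```

Keeping the `overridden` flag in the output makes these corrections auditable later, which matters when you want to measure how often the detector and the specialist disagree.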

🐱 Cat Breed Classification

Model: a custom model, or microsoft/resnet-50 fine-tuned for cat breeds

```python
breed_info = _classify_cat_breed(crop)
```

🐾 Wildlife Species (Non-Dog/Cat)

Model: bryanzhou008/vit-base-patch16-224-in21k-finetuned-inaturalist

This can recognize deer, sheep, goats, horses, and hundreds of other species.

Specialized knowledge like this is far outside what LLMs can reliably infer from raw pixels.
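One way to wire up Step 2 is a dispatch table that maps each YOLO label to the specialist that should refine it. The sketch below reflects an assumption about structure, not the author's code. It uses the Hugging Face `transformers` `pipeline("image-classification", model=...)` API with the model IDs named above (assuming each checkpoint loads in that pipeline), loads each model lazily on first use, and uses `CAT_MODEL` as a hypothetical placeholder because the cat model may be custom.

```python
from functools import lru_cache

DOG_MODEL = "prithivMLmods/Dog-Breed-120"
WILDLIFE_MODEL = "bryanzhou008/vit-base-patch16-224-in21k-finetuned-inaturalist"
CAT_MODEL = "your-org/cat-breed-classifier"  # hypothetical placeholder ID

# Assumed routing: which specialist refines which YOLO label.
ROUTES = {
    "dog": DOG_MODEL,
    "cat": CAT_MODEL,
    "sheep": WILDLIFE_MODEL,
    "cow": WILDLIFE_MODEL,
    "horse": WILDLIFE_MODEL,
    "goat": WILDLIFE_MODEL,
    "deer": WILDLIFE_MODEL,
}


def pick_model(label):
    """Return the model ID for a YOLO label, or None if no specialist applies."""
    return ROUTES.get(label)


@lru_cache(maxsize=None)
def _load(model_id):
    # Imported lazily so a cold start only pays for the models it actually uses.
    from transformers import pipeline
    return pipeline("image-classification", model=model_id)


def refine(label, crop, top_k=3):
    """Run the matching specialist on a crop; returns [{'label', 'score'}, ...]."""
    model_id = pick_model(label)
    if model_id is None:
        return []  # e.g. "person": no breed or species refinement
    return _load(model_id)(crop, top_k=top_k)
```

Caching the loaded pipelines with `lru_cache` mirrors the lazy-loading pattern of `_ensure_yolo_loaded()`: in a warm Lambda container, each model is loaded only once.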

Step 3: Scene Classification

Model: Places365 (MIT CSAIL)

```python
scene_info = _classify_scene(img)
```

This gives us context like "living room", "forest path", "dog park", "pasture", "city street", etc.

An LLM could guess the scene from a description, but Places365 was trained explicitly for scene recognition across 365 categories.

Combining scene and entity labels often enables richer downstream reasoning: a dog in a pasture suggests something different from a dog in a living room.
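The Places365 category file (`categories_places365.txt` in the CSAIL release) lists each label as a path followed by its index, for example `/f/forest_path 0` or `/b/bazaar/indoor 0`. The minimal sketch below assumes the labels have already been split off those lines and that the scene model returns one raw logit per category in file order. It shows how to turn the logits into readable top-k scene labels with a numerically stable softmax.

```python
import math


def clean_places_label(raw):
    """'/f/forest_path' -> 'forest path'; '/b/bazaar/indoor' -> 'bazaar (indoor)'."""
    parts = raw.strip("/").split("/")[1:]  # drop the leading letter bucket
    name = parts[0].replace("_", " ")
    if len(parts) > 1:
        name += " (" + " ".join(p.replace("_", " ") for p in parts[1:]) + ")"
    return name


def top_scenes(logits, raw_labels, k=3):
    """Softmax over raw logits, then return the k most likely (label, prob) pairs."""
    peak = max(logits)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    ranked = sorted(zip(raw_labels, exps), key=lambda p: p[1], reverse=True)
    return [(clean_places_label(lbl), e / total) for lbl, e in ranked[:k]]
```

Keeping the top few scenes with their probabilities, rather than only the argmax, lets downstream logic (or an LLM) see when the model is torn between, for example, a park and a pasture.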

Step 4: Coral Health Example (Optional)

Model: prithivMLmods/Coral-Health

Classes: healthy coral and bleached coral.

Again, this is too specialized for an LLM alone. This optional module shows how easily the pipeline extends to biological and ecological domains.
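Because the coral model is optional, one reasonable pattern is to run it only when the scene classifier suggests an underwater photo. This gating is an assumption, not something the pipeline above prescribes. In the sketch, `UNDERWATER_SCENES`, the 0.3 cutoff, and the injected `classify_coral` callable are all hypothetical, and `scenes` is the list of `(label, probability)` pairs a scene step might produce.

```python
# Hypothetical trigger labels; adjust to whatever your scene model actually emits.
UNDERWATER_SCENES = {"underwater (ocean deep)", "aquarium", "coral reef"}


def maybe_classify_coral(scenes, image, classify_coral, min_prob=0.3):
    """Run the optional coral model only for likely-underwater scenes."""
    underwater = any(
        label in UNDERWATER_SCENES and prob >= min_prob for label, prob in scenes
    )
    if not underwater:
        return None  # skip the extra model call entirely
    return classify_coral(image)
```

Gating like this keeps niche modules from adding latency (or spurious labels) to the vast majority of images where they cannot apply.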

Step 5: CLIP Embeddings for Search + Similarity

Once we've detected entities, we compute embeddings.

Full Image Embedding

```python
image_embedding = _compute_clip_embedding(img)
```

Crop-Level Embeddings

```python
crop_embedding = _compute_clip_embedding(crop)
```

These 512-dimensional vectors (the output size of the ViT-B CLIP models) power reverse image search, similarity lookups, clustering, and retrieval for LLMs (RAG).

LLMs excel once they have structured context — embeddings turn pixels into usable signals.
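With Hugging Face `transformers`, an image embedding typically comes from `CLIPModel.get_image_features(...)`, which returns 512 values per image for `openai/clip-vit-base-patch32`. Whatever produces the vectors, similarity search usually compares them by cosine similarity after L2 normalization. The dependency-free sketch below uses toy 3-dimensional vectors in place of real 512-dimensional embeddings, and the index keys are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def cosine_similarity(a, b):
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))


def most_similar(query, index, k=2):
    """Brute-force nearest neighbours; a vector database does this at scale."""
    scored = [(key, cosine_similarity(query, vec)) for key, vec in index.items()]
    return sorted(scored, key=lambda p: p[1], reverse=True)[:k]


# Toy stand-ins for crop embeddings (real CLIP ViT-B vectors have 512 dimensions).
index = {
    "dog_crop_1": [0.9, 0.1, 0.0],
    "dog_crop_2": [0.8, 0.2, 0.1],
    "cat_crop_1": [0.1, 0.9, 0.2],
}
print(most_similar([1.0, 0.1, 0.0], index))
```

Normalizing once at write time lets a vector store use a plain dot product at query time, which is why cosine distance is a common choice for CLIP collections.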

Why Not Use GPT‑4 Vision for Everything?

Four reasons:

1. Consistency

Specialized models return the same output every time they see the same image. LLM outputs can vary from one call to the next.

2. Traceability

A wildlife classifier with a published dataset is auditable. An LLM hallucinating "this looks like a goat" is not.

3. Latency & Cost

Running YOLO + small classifiers is far cheaper and faster than sending every image to a large vision-capable LLM.

4. Accuracy on Niche Domains

LLMs are "broadly capable", but rarely state-of-the-art on specialty tasks. Specialization matters.

The Orchestration Pattern (LLMs as the Final Layer)

The real magic happens when you combine:

- Detection → what's in the image

- Classification → specialized understanding

- Embeddings → vector search + memory

- LLMs → reasoning based on structured data

LLMs tend to be more accurate when they receive structured inputs like this instead of raw pixel data:

```json
{
  "animals": [
    {
      "type": "dog",
      "breed": "Golden Retriever",
      "confidence": 0.91
    }
  ],
  "scene": "park",
  "has_person": false,
  "brightness": 0.78
}
```
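Here is a sketch of how per-image results might be condensed into that payload and handed to an LLM as text. The input shape (a list of detection dicts), the `min_conf` cutoff, and the prompt wording are hypothetical. The point is that the model receives compact, already-verified facts instead of pixels.

```python
import json


def build_llm_context(detections, scene, brightness, min_conf=0.5):
    """Condense pipeline output into the compact JSON an LLM will read."""
    animals = [
        {
            "type": d["label"],
            "breed": d.get("breed"),
            "confidence": round(d["confidence"], 2),
        }
        for d in detections
        if d["label"] != "person" and d["confidence"] >= min_conf
    ]
    return {
        "animals": animals,
        "scene": scene,
        "has_person": any(d["label"] == "person" for d in detections),
        "brightness": round(brightness, 2),
    }


def build_prompt(context, question):
    # Ground the LLM in structured facts and ask it not to go beyond them.
    return (
        "Answer using only the image analysis below.\n"
        f"Image analysis (JSON):\n{json.dumps(context, indent=2)}\n\n"
        f"Question: {question}"
    )


ctx = build_llm_context(
    [{"label": "dog", "breed": "Golden Retriever", "confidence": 0.912}],
    scene="park",
    brightness=0.781,
)
print(build_prompt(ctx, "What breed is the dog, and where is it?"))
```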

This stack is not "LLM vs ML models." It's LLMs + ML models working together.

Real-World Example: My Animal-Focused Pipeline

At the end of a single image analysis run, I store:

- full-image embedding

- multiple crop embeddings

- detection metadata

- refined classifications

- scene data

- coral/wildlife notes

- brightness/orientation

Everything goes into Qdrant, enabling fast similarity search, retrieval-augmented generation, and filtering ("show me bright images of dogs in parks").
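As an illustration of what storing everything in Qdrant can look like, the sketch below turns one analysis run into point dictionaries shaped like Qdrant points (`id`, `vector`, `payload`). It also defines a filter for the "bright images of dogs in parks" example, written in Qdrant's JSON filter syntax (`must`, `match`, `range`). The payload field names, the one-point-per-embedding layout, and the 0.6 brightness cutoff are assumptions. Qdrant point IDs must be unsigned integers or UUIDs, so deterministic UUIDs from `uuid5` are used here. With the official `qdrant-client` package, these dictionaries would map onto `PointStruct` objects for `upsert` and a `Filter` for queries.

```python
import uuid

NAMESPACE = uuid.NAMESPACE_URL  # any fixed namespace yields stable IDs


def build_points(image_key, image_vec, crops, meta):
    """One point for the full image plus one per crop, sharing image metadata.

    crops: list of dicts with "vector", "label", and optional "breed".
    meta:  image-level fields such as scene and brightness.
    """
    animal_types = sorted({c["label"] for c in crops if c["label"] != "person"})
    base = {"image_key": image_key, "animal_types": animal_types, **meta}
    points = [{
        "id": str(uuid.uuid5(NAMESPACE, f"{image_key}#image")),
        "vector": image_vec,
        "payload": {**base, "kind": "image"},
    }]
    for i, crop in enumerate(crops):
        points.append({
            "id": str(uuid.uuid5(NAMESPACE, f"{image_key}#crop{i}")),
            "vector": crop["vector"],
            "payload": {**base, "kind": "crop", "label": crop["label"],
                        "breed": crop.get("breed")},
        })
    return points


# "Show me bright images of dogs in parks" in Qdrant's JSON filter syntax.
# A match on an array field succeeds if any element matches.
BRIGHT_DOGS_IN_PARKS = {
    "must": [
        {"key": "kind", "match": {"value": "image"}},
        {"key": "animal_types", "match": {"value": "dog"}},
        {"key": "scene", "match": {"value": "park"}},
        {"key": "brightness", "range": {"gte": 0.6}},
    ]
}
```

Deterministic IDs make reprocessing idempotent: running the same image through the worker again overwrites its points instead of duplicating them.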

This architecture is general-purpose: animals today; people, plants, or products tomorrow.

Why This Matters for Your AI Strategy

If you are building vision systems:

- Don't rely on LLMs alone.

- Don't rely on single-model pipelines.

- Don't assume a bigger model solves every problem.

Instead:

Use the right tool for the job — orchestrate many small models with one large one.

This is the future of practical AI systems.

Coming Next in This Series

This post is the first in a multi-part series:

- Why LLMs Still Need Traditional Classifiers (this post)

- How to Build a Multi-Model Vision Pipeline

- Building "Animal vs. People" Classification Systems

- How to Store Embeddings in Vector Databases (Qdrant Edition)

- How to Combine LLMs with Structured Vision Data (RAG for Images)